Research your AI research tools

This image was AI-generated by Canva Magic Media™ (Pro) using the prompt ‘army of little robots’.

Approximate read time: 10 minutes

Introduction

In 1999, I took up a position as a research assistant at University College London (UCL) while pursuing my PhD. In the corner of my new office stood a tall grey filing cabinet holding carefully organised copies of research papers relevant to our field. Fast forward 25 years, and that grey cabinet has long been obsolete. The way the research community approaches research has changed so much that it is slightly mind-boggling.

It is safe to say that research and academia in all disciplines have been irreversibly changed by the exponential rise of artificial intelligence. While the media relentlessly reports on AI’s progress and sensationalises its pitfalls, it’s important to remember that we are not in a sci-fi novel featuring sentient alien AI technology. We are in an era where AI is being built to mimic, emulate, and replace human behaviour, with varying degrees of success. This has important implications, in particular for how we conduct research.

‘Now more than ever, we need to understand the extent of the transformative impact of AI on science and what scientific communities need to do to fully harness its benefits.’ – Professor Alison Noble

Royal Society – Science in the Age of AI

That said, machine learning has been around for ages. When I worked with deep-screened monitors and floppy disks during my research days, members of my research group were already producing training data sets to look for small changes in the noise to aid the diagnosis of skin cancer. We certainly weren’t the first to do this kind of research, either.

Like many technologies before it, AI has the potential to revolutionise research methodologies. Even so, the same principles of good research practice I learned as a PhD student in the ‘90s still need to apply.

Fundamentally, AI can be an incredibly powerful tool to aid the progress of scientific knowledge, but we must utilise it in a way that ensures research remains honest, transparent, accountable, respectful, and rigorous – the key tenets of ‘research integrity’.

The scale of AI in research

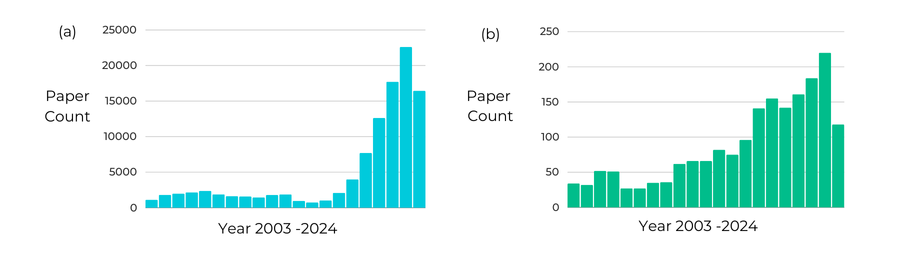

To get an idea of the scale and timeline of AI adoption in research, a basic PubMed search for the term ‘Artificial Intelligence’ (searching all fields) shows a paper count of just 1,130 in 2003. The count has increased exponentially since then, reaching 16,453 papers in 2024 so far, with the sharpest rise in the last 6–7 years (searched on 25th July 2024, see Figure 1a). Only three papers, all published recently in 2023 and 2024, included both the terms ‘artificial intelligence’ and ‘research integrity’. For curiosity’s sake, I have also included the paper count for the search term ‘research integrity’ on its own, which shows that interest in research integrity has been steadily increasing over the past 10 years, albeit on a minuscule scale compared with that of AI (see Figure 1b).

Figure 1. PubMed search using the terms (a) ‘artificial intelligence’ and (b) ‘research integrity’, with paper count plotted against year, from 2003 to 2024.

We know AI is now part of the furniture, but what does that mean for research integrity? Research integrity is the umbrella term that refers to all the factors that underpin good research practice and promote trust and confidence in the research process. Five actions enable research integrity: honesty, transparency, accountability, respect, and rigour (see Figure 2).

Figure 2: What is Research Integrity? – UK Research Integrity Office (ukrio.org)

In this blog post, I focus on the choices researchers now face, given the proliferation of AI tools that promise to streamline and enhance research workflows; in particular, I look at the research integrity considerations involved in making these decisions.

AI, your research assistant

The range of AI tools available to assist in research is ever-growing. There are now AI tools for:

- data analysis

- writing papers

- summarising research papers

- multi-document analysis

- checking your submission meets journal criteria

- image creation

- research poster design

- making presentations

- improving academic writing style

- automating literature reviews

- literature mapping

- and more

AI has popped up on search engines’ home pages (e.g. MS Copilot) and in platforms you may have already been using for some time (e.g. Canva). There are also AI search engines that have been developed specifically for academia.

Asking ChatGPT 4.0

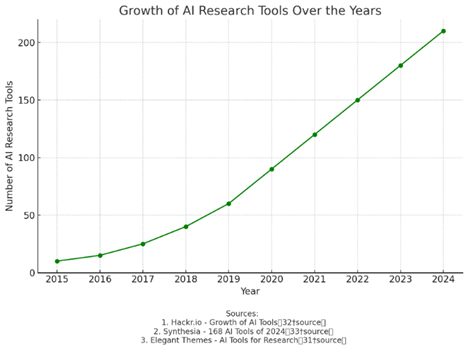

I asked ChatGPT 4.0 (free version) for a graph showing the number of AI research tools by year (this question was asked at 11.41 pm on 25/07/2024). It suggested that the number of available AI research tools had doubled over the past three years, and it produced a graph to illustrate this.

The graph, ChatGPT explained, ‘show(s) the growth of AI research tools over the years. The data reflects a significant increase in the number of these tools from 2015 to 2024, indicating a rapid expansion and adoption of AI technologies in the research domain.’

ChatGPT claimed to use the following sources to produce its findings:

- Hackr.io – Growth of AI Tools (Hackr.io)

- Synthesia – 168 AI Tools of 2024 (AI Video Generator)

- Elegant Themes – AI Tools for Research (Elegant Themes)

Next, I asked ChatGPT, ‘How many AI tools specifically designed for research are available now?’ (This question was asked at 12.58 pm 25/07/2024). It responded:

‘As of 2024, there are numerous AI tools specifically designed to aid in research. While it’s difficult to pinpoint an exact number due to the rapid development and introduction of new tools, several sources list around 40 prominent AI tools tailored for research purposes. These tools encompass a variety of functionalities such as literature review, paper summarization, citation management, academic writing assistance, and research collaboration.’

ChatGPT, one day later

One day later, I returned to ChatGPT with the same prompt (11.50 am, 26/07/2024). In response, it said:

‘As of 2024, there are around 30 specialized AI tools available for research purposes. These tools cover various aspects of the research process, including literature review, data analysis, citation management, and writing assistance (Elegant Themes) (Hackr.io) (AI Video Generator).

For those specifically looking at AI tools designed for research, the market is growing rapidly, driven by the increasing need for automation and efficiency in the research process. For instance, Scite’s database now includes over 1.2 billion citations, highlighting the scale and scope of AI’s impact on research (Elegant Themes).’

It is notable that within 24 hours, ChatGPT’s answer dropped from around 40 tools to around 30. There may be good reasons for this that I am unable to verify, but it raises questions about the accuracy and integrity of the tool’s outputs.

At a glance, the capabilities of AI research tools look impressive, especially those that visualise literature maps or synthesise summaries of papers. Part of me, however, is sceptical and wonders whether this sales talk holds up in practice, particularly given the lack of resources available to train researchers in using AI research tools.

Researcher attitudes to AI

A recent Oxford University Press survey entitled Researchers and AI received 2,345 usable responses from researchers around the world[1] and across various disciplines[2] about their use of AI in research; 76% of respondents reported using some form of AI tool. The most common use was machine translation (49%), followed by chatbot tools (43%) and AI-powered search engines or research tools (25%). Even from this limited sample, it is evident that AI tools are being widely used across disciplines.

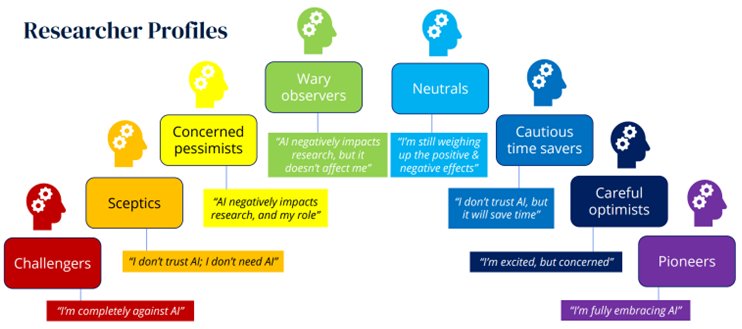

However, when these researchers were asked about their attitudes towards AI tools, it was interesting to see a spectrum of opinions, from ‘Challengers – those fundamentally against AI’ to ‘Pioneers – those fully embracing AI’ (see Figure 3). Among UK respondents, there was a roughly equal split between ‘Challengers’ and ‘Pioneers’, with slightly larger proportions falling into the ‘Cautious time savers’, ‘Neutral’ and ‘Wary observers’ categories (although the UK sample is very small, at n = 219). This distribution may indicate a lack of knowledge of, and confidence in, using AI among researchers.

Personally, I likely fall into the profile of ‘Careful optimist’. How about you?

Figure 3: Screenshot from page 11, Oxford University Press, entitled Researchers and AI.

[1] The majority of respondents were from the US (46%) or UK (11%).

[2] The highest proportion was from the humanities (40%), followed by the social sciences (32%).

How do you know which AI tools are OK to use?

When deciding which AI tools are acceptable to use, a helpful rule of thumb is to distinguish between AI tools that assist and enhance your academic endeavours and those that replace them. Some instances in which AI tools replace your academic endeavours could be considered breaches of research integrity, and some could even amount to serious breaches known as research misconduct.

Use your ethical compass when using AI tools: continually ask yourself whether your conduct would be considered good practice (see UKRIO’s Code of Practice for Research for guidance), ask colleagues what they think, and speak to your research integrity lead. This is especially important if you haven’t received any training on AI, as you may well be unaware of its risks. Seek out training and advice so that you become confident and competent in using these tools and can gain their benefits.

AI can be a very powerful asset in research, but you must be aware of its risks, including the threats it can pose to research integrity if not used appropriately. Some of these risks include:

- Plagiarism, such as using other people’s work or material without consent and appropriate acknowledgement

- Misrepresentation, e.g. inappropriate claims to authorship

- Not meeting ethical standards, e.g. lack of appropriate informed consent, exposing individuals to harm or discrimination

- Lack of information privacy, protection and security (breaching laws and regulations, exposing research results before publication, releasing personal data)

- Failure to manage conflicts of interest

- Infringing third-party intellectual property

- Failure to manage copyright and licence agreements; most AI-generated content is not protected by copyright

- Contravening the terms of employers’ insurance

- Failing to meet funder conditions

Being a ‘cautious optimist’ may be a beneficial mindset for exploring the potential of AI – be open to AI’s possibilities but carefully consider its implications for research integrity.

To help you navigate decisions on the use of AI in research, I’ve outlined some questions you should ask yourself when deciding if an AI tool is appropriate to use in your research. Though this list of questions is by no means exhaustive, it could help you to reflect on some of the research integrity considerations that come with AI use. When navigating these questions, remember to consider the five actions of research integrity: honesty, transparency, accountability, respect, and rigour (Figure 2).

Guiding questions for using an AI tool as a research assistant

- Are you using the tool to assist and enhance your academic endeavours or to replace them? If the latter, make sure this isn’t breaching research integrity (for example, through plagiarism).

- Is there a significant benefit to using the AI tool (for example, will it save you time, streamline your processes, or improve the visualisation of your concept)?

- What limitations does the tool have that could cause a breach of research integrity (see list of examples of risks to research integrity above)? How will you address these limitations and/or mitigate risks associated with them?

- Does your employer already have a licence agreement for the use of the AI tool? If not, can they recommend a similar tool?

- Does your employer have existing guidance or processes for evaluating AI tools?

- Do the Terms and Conditions of the tool (if applicable) enable you to use the tool the way you want to? Do you understand them, or do you need to seek help to fully understand them and their associated risks?

- Do you need training on how to use the tool appropriately (e.g. writing appropriate prompts)?

- If you plan to upload your research (data, text, code, images, etc.), have you spoken to your governance team first about the associated risks? (Note: once information is uploaded, you may not be able to retrieve it easily, or at all. Potential research integrity breaches could relate to ethical considerations such as lack of informed consent and lack of information privacy, protection and security.)

- By using the tool, are you diminishing your opportunities to grow as a researcher, for example by developing your critical thinking, problem-solving, creativity, and storytelling skills?

- How will you track your use of the AI tool in your work? What would best practice for transparently tracking and recording AI use look like in your discipline?

- Are you familiar with the requirements for disclosing AI use in publications or other outputs relevant to your discipline?

- Did you know that in certain circumstances using AI is inappropriate because it breaches confidentiality (e.g. in peer review of grants and publications)? Are you confident that your use does not do so in this instance?

- Are there standards in your discipline that you must follow when using AI (e.g. for writing a clinical review)?

- Are you assured you have provided sufficient human oversight (e.g. have you checked that you are not citing retracted or paper-mill articles, and checked for misinformation)?

About me

I am neither an AI expert nor an ethicist, but I am an experienced researcher who has worked on preclinical studies to improve drug delivery, and a research integrity specialist working with the UK Research Integrity Office (UKRIO). I have extensive experience providing advice and guidance to researchers and other stakeholders on matters of research integrity.