EASE/UKRIO Peer Review Week webinar

How can peer review support research integrity?

Wednesday, 21 September 2022 2pm-3.30pm (UK time)

EASE (the European Association of Science Editors) and UKRIO joined forces to host an online panel discussion for Peer Review Week 2022. This year’s theme was “Research Integrity: Creating and supporting trust in research.” The video of the panel, an edited transcript, and the slides are below.

Over the last few years, there has been increasing attention to research integrity, and to the need to develop best practices and incentive structures that encourage and support integrity in research. Through its important role in evaluating and vetting research before publication, peer review is one of the steps that can support awareness of research best practices and compliance with integrity requirements.

Peer review plays an important role in researcher education, for example by fostering the use of, and compliance with, reporting guidelines. Peer review can also identify ethical concerns about the design, conduct or communication of research. But can we expect peer review to detect all possible deviations from rigorous research practices? Where does peer review fit within broader steps by different stakeholders to drive integrity in research?

In this Peer Review Week webinar, speakers bringing the perspective of journals, researchers and institutions shared their experience handling integrity cases and discussed the role of peer review in driving integrity and trust in research.

Thank you to Iratxe Puebla of the EASE Peer Review Committee for co-organising the panel, EASE Secretary Mary Hodgson for hosting and running the webinar, EASE President Duncan Nicholas for editing and uploading the video, and UKRIO Senior Research Integrity Manager Josephine Woodhams for helping with the Q&A.

Introduction by James Parry, Chief Executive of UKRIO (slides).

Matt Hodgkinson, Research Integrity Manager at UKRIO and EASE Treasurer, chaired the webinar with contributions on the panel from:

Gowri Gopalakrishna, Amsterdam University Medical Center (slides)

John Carlisle, ‘Data detective’ and NHS anaesthetist, Torquay

Magdalena Morawska, Research Policy and Governance Officer, UCL (slides)

Caroline Porter, SAGE Publishing and COPE Trustee (slides)

00:00 Housekeeping – Mary Hodgson

02:21 James Parry – Introduction to research integrity and UKRIO

14:15 Matt Hodgkinson – Introduction to the panel

16:03 Gowri Gopalakrishna – National survey on research integrity

24:15 John Carlisle – Experiences editing Anaesthesia

31:40 Caroline Porter – How can peer review support integrity?

38:00 Magdalena Morawska – Better research and research culture

44:30 Panel discussion and Q&A

The session opened with a presentation from the UKRIO Chief Executive, James Parry, discussing the concepts of research integrity and the work of UKRIO. The transcript below has been edited for clarity and to add images and links.

James Parry:

What does peer review mean for the research community? Why does it matter, what are its strengths and weaknesses, and what are the different approaches to peer review?

Peer review is a communal activity. We often think of peer review as something done to us when we submit our research, when we put our heads above the parapet and decide to disseminate our outputs, our conclusions, our findings, and then these mysterious cabals of peer reviewers have a look at our work and decide whether it passes muster or not. But actually peer review is done by the research community. It’s unpaid labour, sometimes it’s anonymous, sometimes it’s identifiable, but we as researchers make up those peer review panels. So knowledge of peer review, of how to do it as well as how to have it done to you, is really important. The very concept of peer review, in all its myriad forms (because there are lots of different ways of doing it), is really important for the honesty, accuracy and reliability of research, and therefore for public trust in research and for research integrity.

At UKRIO we advise on anything to do with good research practice: how to get it right, and what to do if things go wrong. We support individual researchers and organisations throughout the UK, covering all subject areas of research. So what is research integrity?

“Most people say that it is the intellect which makes a great scientist. They are wrong: it is character.” Albert Einstein

“Excellence and integrity are inextricably linked.” UK Concordat to Support Research Integrity (1st edition, 2012)

“In general terms, responsible conduct in research is simply good citizenship applied to professional life… However, the specifics of good citizenship in research can be a challenge to understand and put into practice.” US ORI Introduction to the Responsible Conduct of Research (2007)

There are a few ways you can view it, but in many ways it can be viewed as synonymous with good research practice on a variety of levels. It’s about ensuring that your research is of high quality and meets high ethical standards. It’s about doing the best research you can. It’s about you as a researcher reflecting on the challenges and pressures you face in trying to do good research, and how to overcome them.

It also goes beyond the level of individual researchers, or even research teams and projects. It’s about the research community thinking about the challenges and pressures facing all researchers and what we can collectively do to overcome them: whether the way we fund, carry out, assess and disseminate research improves or harms quality and ethical standards. These are often described as issues of research culture, or of “publish or perish”.

This isn’t me being aspirational or speaking in a vacuum. These are topics being considered by governments, research funders, publishers and major research organisations, and there’s a huge amount of discussion in the academic press, in academic journals and throughout the research community. This is very much a topic that’s on everybody’s minds, and I think that’s because of a background of growing questions about the overall reliability of research, and a growing interest in whether researchers are getting things right or wrong, and why. It’s worth noting that the challenges facing researchers have increased recently, because the pandemic has put pressure on all sections of society and the research community isn’t immune to that.

The UK, taking one country on its own, has a justified reputation for producing high-quality research, ethical research, and high-calibre researchers, but no country is immune to mistakes, to sloppiness, and to research fraud. Without trust we can’t do research, and to have trust we and our research must have integrity, and that’s why it’s important and that’s why it applies to everyone involved in research and we all have a role to play in this.

Key elements of research integrity are:

- Honesty, Rigour, Transparency and Open Communication, Care and Respect, Accountability

UK Concordat to Support Research Integrity (2019)

Key themes:

- All disciplines

- All career stages

- All elements of your research: from beginning to end

- Enabling research, not restricting it

- Safeguarding trust in research

- ‘No such thing as failures, only setbacks’

Research integrity basically means good practice. It means meeting basic standards for your research, whether they’re disciplinary standards, regulatory or contractual standards, what your supervisor or team leader says, guidelines, institutional conventions or ethical approval conditions. It means adopting best practice on research methods, on managing your data, on consent, and on dissemination and authorship. This applies to all disciplines (the specifics will differ depending on discipline or sub-discipline) and to all career stages, from the moment you start out in a research career to the moment you retire and get to put your feet up for the first time in 40 years. It applies from the moment you come up with the idea or theory for your research to the moment you disseminate it and move on to something else. This is something to be done actively and continuously, throughout the lifecycle of your research. It isn’t just something you think about at the beginning, when you consider whether you need ethical approval.

The good news is you’re probably doing a lot of this already. This is part of the everyday professional good practice of being a researcher. It isn’t something other, something alien, this is working out what good research practice means for you and thinking about it perhaps a bit more than you currently do.

This is the key message: you’re not expected to treat yourself as a researcher as a set of errors waiting to happen, or as a potential research criminal, or to straitjacket yourself. That is not what this is about. It is about reminding yourself that integrity, good practice, ethics and basic standards apply to you and your research regardless of your discipline, regardless of whether you have external funding, regardless of whether you have human participants, and regardless of whether you need ethical approval. It’s about working out what good research practice means for you and your discipline, for your particular research project in your particular research environment, and remembering that the rules of the road for research aren’t set by researchers themselves but by others, and that they change and evolve. There can be a fine line between acceptable and unacceptable practice, between a correct research method and one that’s not right for the situation or has been discredited, so we need as a community to be quite self-reflective in our practice; otherwise problems can occur.

UKRIO has run an Advisory Service since its inception in 2006, helping individuals and organisations get things right and supporting them when things go wrong.

- A recurring theme from UKRIO: problems occurring because of overconfidence, bad habits or a failure to get help – serious problems can be avoided with a bit of foresight.

- Awareness and training: researchers need to be encouraged to be self-critical and there should be no stigma attached to asking for assistance.

- Organisations need to support their researchers in this.

- Prioritise culture/leadership and improving systems/incentives, and recognise that this is long-term work.

A recurring theme we’ve seen with challenges to good research practice is that people fall in love with a theory or an idea: “this is brilliant, all we need to do is prove it”, or “this will be so good once we’ve done the study and written it up”, and that can be the start of a very slippery slope. Serious problems can sometimes occur because of overconfidence or difficulties in individuals speaking up and saying what they’re grappling with. We think it’s really important that, to avoid mistakes and to improve quality, standards and ethical practice, we are collectively self-critical and reflective about what we’re doing in our research and about the best way to do things. This isn’t a burden that should be put on researchers alone; organisations need to really help in inculcating research cultures, environments where people can talk openly about the successes and challenges they’re facing and where there’s no stigma attached to asking for help.

I think as researchers we’re a profession that discovers stuff, or finds out stuff, or invents stuff. I think with that there can often come a pressure that we should know what we’re doing, we should be able to figure out the answer to any problem. Actually, just like any profession in the world, we’re not immune to mistakes, we’re not immune to moments of self-doubt, we’re not immune to pressures and negative incentives within the organisations and systems in which we work. So it’s really important for individuals and organisations to recognise that it’s OK to not know what to do, it’s OK to ask for help, and organisations must be supportive. A lot of this is about cultural leadership and improving systems and incentives. Some of this will come from the top down; with others it’s more a case of collective action, working together, and that’s really important.

“Peer review is defined as obtaining advice on manuscripts from reviewers/experts in the manuscript’s subject area. Those individuals should not be part of the journal’s editorial team. However, the specific elements of peer review may differ by journal and discipline…”

Going back to what I said in the introduction, peer review is a collective activity by the research community. It’s one of the checks and balances for research dissemination. Various types of peer review have different strengths and weaknesses and peer review as a concept has been subject to an awful lot of debate over the years as to how it can be made better. I think we’ll always be looking for that perfect solution, but I think it’s also important to recognise that every one of us has a role to play in this – in carrying out peer review, in improving it, enhancing it, learning from the process – whether we’re having our submissions reviewed by others or whether we’re acting as reviewers, because this is a collective activity by the research community to help safeguard and enhance good research practice.

Gowri Gopalakrishna:

I wanted to start by reminding us of Robert Merton’s proposition of scientific norms, sometimes affectionately known as CUDOS. He proposed the following four norms in the 1940s. Suffice to say, they have been debated and contested since then, but as a research integrity meta-researcher and open science researcher I feel that these four norms fit very succinctly with what we are attempting to achieve in research integrity, and also in peer review. The four norms are: communality, that science is a product of social collaboration, assigned to the community; universalism, that the acceptance or rejection of claims needs to be independent of any personal or social attributes; disinterestedness, that researchers should be motivated by identifying the truth rather than by other motivations, which may or may not be selfish in nature (that is open to debate); and organised scepticism, my personal favourite and probably the one most relevant to the topic today, which holds that the ability to verify data and scrutinise others’ claims is critical for the credibility and progress of research.

| Norm | Description |
| --- | --- |
| Communality | Science is a product of social collaboration, assigned to the community. Secrecy is its antithesis; full and open communication its enactment |
| Universalism | Acceptance or rejection of claims is independent of the personal or social attributes of its protagonist. Research findings are fundamentally “impersonal” |
| Disinterestedness | Researchers should be motivated by identifying the truth rather than by (selfish) professional or monetary motivations |
| Organized Skepticism | The ability to verify data and scrutinize others’ claims is critical for research credibility and progress |

Over the last four years, I have been leading a study of the prevalence of three different types of research behaviour, as well as some associated factors that might help us understand what drives these behaviours. This was the Dutch National Survey on Research Integrity. We had roughly 7000 respondents, and we found that questionable research practices (QRPs) and research misconduct, defined here as fabrication and falsification, still happen too frequently. One in two researchers self-reported having frequently committed at least one QRP, and about one in 12 reported fabrication or falsification of data, or both. Given that these estimates are self-reported, we believe the true figures are likely to be higher, which places even greater importance on the need to improve research quality. We also tried to go a step further and understand which associated factors might be driving these questionable research practices and research misconduct. Among the factors we found, I want to highlight two. First, subscription to these Mertonian or scientific norms is still very much alive among the 7000 or so researchers we surveyed. There was a significant association between subscribing to these norms and a lower likelihood of engaging in questionable research practices and research misconduct, suggesting that reviving these norms in our community and research cultures may be important. Second, the factor with the biggest effect size in lowering the likelihood of research misconduct was the perceived likelihood of detection of such behaviours by reviewers. In other words, researchers still seem to believe quite strongly in peer review as an important means of safeguarding research quality.

| Behaviour (QRP/FF) | Prevalence (%) |
| --- | --- |
| Any frequent QRP (at least one QRP with a score of 5, 6 or 7) | 51.3 |
| Fabrication (making up data or results) | 4.3 |
| Falsification (manipulating research materials, data or results) | 4.2 |
| Any FF (fabrication or falsification, or both) | 8.3 |

But peer review as we know it tends to get a bad rap, and I would like to suggest that perhaps this is because it’s not set up in the best possible way to support research as a collaborative effort; research as needing to be open and transparent in order to ensure accountability and trust, especially as we move towards open science and the uptake of open science practices; and research as a scientific dialogue of sorts and, at best, a proposition of the truth. So we are back to the proverbial carrot-or-stick story. If we indeed see and value peer review as a means to safeguard research integrity, then why aren’t we recognising and rewarding that behaviour accordingly? To this end, a few suggestions. Firstly, I think it is important to recognise and reward peer review, be that extrinsically through promotion and tenure tracks, or intrinsically through rewards within a research group or department, highlighting and praising good reviewers and reviews. Secondly, review of research should ideally happen at all stages of research and not only at its end, and I think we all agree on this. Although this is changing, it’s happening quite slowly. It should become the norm rather than the exception, which also goes back to my earlier point about rewarding and recognising the behaviour that we want to see in research and in our research community. Certainly, peer review is about critique, as we are all well aware, and that’s hard even for the best of us, especially to receive. So I believe that training researchers in good peer review and good peer review practices (how to peer review and what standards to go by) should be part of their education. Perhaps this could even be a journal commitment to collective standards, similar in a way to reporting standards.

Now, while journals may already do this, from my personal experience as a peer reviewer and as an editor I know that the quality tends to vary greatly. A final note here is to push for a broader definition of peer review, especially with the advent of open science. I’d particularly like to emphasise civil society: scientific education is equally necessary for people to be able to assess the credibility of research, whether it is in a journal, a preprint, or a study protocol shared through open science practices. We need to address this need for scientific education as part of a broader educational agenda.

Matt: I love the idea that researchers have to commit to addressing criticism.

John Carlisle:

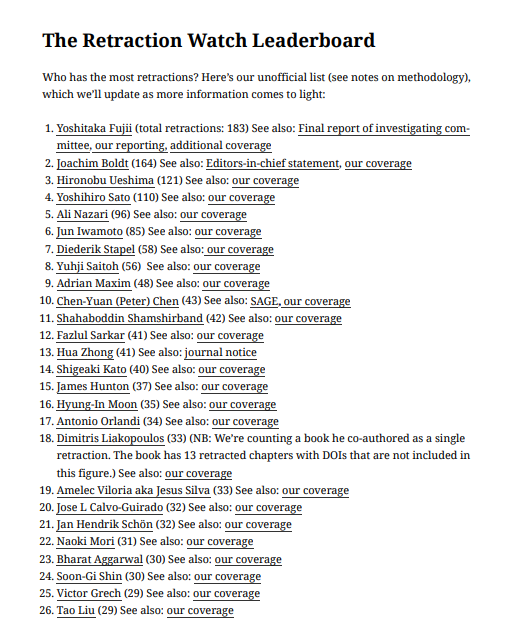

I’m an editor for a journal called Anaesthesia, and before that I was an editor for The Cochrane Collaboration. During my work for both, I’ve come across falsified data. The first case was an anaesthetist called Yoshitaka Fujii, who has had approximately 183 randomised controlled trials retracted. You can look at the Retraction Watch leaderboard to see how many papers have been retracted from various authors, and he’s currently number one on that list. The rest of the list includes quite a few other anaesthetists. So either we, as a clinical specialty, lie a lot or we’re just not very good at it and keep getting found out [Matt: or anaesthesiology editors and journals are making a particular effort to clean up the published literature]. My take-home message is that about one in three randomised controlled trials submitted to my journal contain enough falsified data that they shouldn’t be published. I then followed up those papers, and most have been published. So I’d guess that if our experience at Anaesthesia is replicated across all journals, then maybe about one in three randomised controlled trials that you read are fabricated, possibly more, and one might imagine that the same would apply to other types of papers as well. So I think the extent of the problem is huge. From an ethical point of view, the focus for me is the patient, who’s the ultimate consumer of good or bad clinical practice, which is often based on research. Obviously, if the research is faulty, so is the practice, potentially imperilling the lives and health of the patients I help care for.

The opportunity for a peer reviewer to identify fabricated data is limited. If you just look at the paper as supplied to you as a peer reviewer, you’ll probably identify about two out of every 100 papers you review as having falsified data. If you have access to individual patient data (large spreadsheets of numbers), you’ve got a much richer data source from which to identify falsified data, and that will increase your ability to identify it about 20-fold, taking your identification of false trials from somewhere between one and two in 100 up to around 30 or so in 100. Elisabeth Bik may be a name you’re familiar with: she identifies falsified, or at least copied, data in western blots, histology slides and other data-rich sources. The problem with the papers that you’ll generally review is that they don’t contain a sufficient density of data for you to identify falsehood. So my recommendation is that you might start requesting individual patient data from the authors of papers you’re peer reviewing or editing. It’s a big job, and it takes a lot of time. I’m more than happy to help other people look for falsified data, but I have limited time myself as a full-time clinician. I’ll try if I can, and I certainly have some tips on how you might go about looking at spreadsheets: a couple of appendices in the papers I’ve published include examples of spreadsheet data in which I’ve identified falsified data.

Matt: Has there been any progress on the cases of non-random sampling you discovered in over 5000 RCTs in anaesthesia and general medical journals?

John: Have any further trials been retracted? The main headline trial that was retracted after I analysed those trials was a study in the New England Journal of Medicine called the PREDIMED trial, which was about Mediterranean diets. The NEJM took that paper quite seriously and looked closely at 11 of the least likely data distributions among the couple of hundred papers I analysed from that journal. Of the others, most had false data but could be corrected, so there were a few corrections as well. I don’t think the other journals, JAMA and four anaesthesia journals, have looked systematically at the papers I highlighted. So no, I’m not aware of any particular progress on those. But as I said, looking at summary data, which was the analysis in that particular paper, is a very blunt tool for identifying false data; it’ll only pick up a few. I didn’t say they had false data, I just said they had unlikely distributions. As I said, you need a richer data set, which involves the authors looking back at the study and how it was conducted and realising they made an error. An unlikely distribution doesn’t mean people have made stuff up, although that is one possible cause, but it does make error or intentional falsehood fairly likely.

Caroline Porter:

I’m really thinking about peer review and research integrity through the lens of publication ethics. As I see it, the primary aim of peer review in this context is to build a credible body of knowledge and to advance scholarship. As others have said, it’s an incredibly important system of checks and balances. It is, of course, an ecosystem of publishers, journal editors, researchers, peer reviewers, funders, academic institutions and industry groups, all of whom have a part to play in making sure that this system works. So I wanted to share a quote from Professor Karin Wulf. To my mind peer review is an imperfect system and it is subject to bias, misjudgments, and human error. However, it is a vital part of quality control and the system of scholarly knowledge production would be weaker without it.

I think the key thing here is that peer review is never meant to be definitive about whether the research, and the interpretation of the research sources, is right or wrong per se. It simply indicates that a group of experts have deemed it valuable enough to fund, or publish, or to earn a position for the researcher. In this excerpt Karin Wulf also alludes to dimensions of bias that can play out in peer review, and I think all stakeholders need to be mindful of that bias, and seek to address it, in order to create a more equitable platform for scholarly communication.

COPE’s peer review guidelines provide basic principles and standards to which all reviewers should adhere during the peer review process in research publications. A couple of key points here from that guidance emphasise the importance of the role, but also the extent to which it relies on trust, on personal and professional integrity, and on goodwill. Peer reviewers really play a central and critical part in the production of scholarly knowledge, but too often they come to the role without enough guidance or support around their obligations. Reviewers also have other demands on their time, meaning they may not have the capacity to be as thorough as one might like them to be in an ideal world where we all have enough time for everything.

The Research Integrity Ecosystem:

- Researchers – authors

- Researchers – reviewers

- Journal editors

- Publishers

- Universities

- Funders

- Industry bodies (COPE, UKRIO, EASE, STM)

- Readers

Research integrity is really a shared endeavour across multiple stakeholders. Publishers exist within an ecosystem, and there needs to be collaboration and coordination across all parts of that system towards a common goal of credible knowledge production. We should also talk about why misconduct or mistakes happen in the first place. James alluded to this in his introduction when he mentioned the publish or perish culture and the question of how we can incentivise good behaviour in research. It’s not just publishers. It’s not just institutions. It’s each part of this ecosystem working together in partnership, being willing to share knowledge and experience, admit mistakes, and work to improve. For me as a publisher, it’s worth saying that we sit towards the end of the research cycle, so our role in supporting research integrity often means that we act as gatekeepers rather than participating in prevention. We can help set standards, provide processes, and provide training and support, but funders and institutions are better placed to drive incentives, motivation and overall research integrity from the outset.

Finally, I just wanted to point towards a few resources. SAGE offers a free webinar on how to be a peer reviewer, and COPE provides a wealth of materials, free resources and guidance, including ethical guidelines for peer reviewers. There’s the STM Integrity Hub, which is an important cross-publisher initiative. It’s new, and I think it’s a great opportunity for publishers to work together to address, detect and prevent various types of fraud or misconduct. And finally, SOPs4RI, or Standard Operating Procedures for Research Integrity. This is an organisation that has built what they’re calling a toolbox: easy-to-use standard operating procedures and guidelines for research performing and research funding organisations.

Matt: It’s always worth remembering that peer review is just one part of the research integrity ecosystem and thinking of how it all links together is really important.

Magdalena Morawska:

It’s really interesting to see this focus on research integrity and how peer review can support it, and I hope I can show that it’s a two-way street. To start, I need to take a step back and think again about what research integrity is. We need to ask again: why is integrity important? What’s the purpose of research integrity? The actual purpose is to deliver high-quality research that can be trusted. If you think about peer review, while there is much criticism, I think most people still agree that it’s really useful for evaluating research and establishing its quality. And thinking about purpose, the purpose of peer review is also to deliver high-quality research that can be trusted. So here, research integrity and peer review have the same purpose, and that’s why I think it’s a two-way street: peer review supports research integrity, but research integrity can support peer review too.

There are many different definitions of research integrity, but what I find useful is to think about it in terms of core elements, or principles, or what some call values. Here in the UK, we have the Concordat to Support Research Integrity, which outlines those principles, and in Europe there is the ALLEA European Code of Conduct for Research Integrity, which outlines similar principles. These generally include honesty, rigour, care and respect, accountability, transparency, and open communication. They apply to all stages of the research lifecycle, and I think this can be useful in peer review too.

UKRIO summary of the elements of research integrity

I think most researchers, if not all, find themselves at some point on the receiving end of comments, and it’s not easy to deal with criticism. We’ve all been there. It’s really hard. But how could the principles help us do it with integrity? This is just one interpretation of those principles, and it will depend on how each of us interprets those values, but think about honesty, for example. Look at the comments honestly: is there a point worth considering that you might have missed, or an error you made? Rigour: check and recheck your data and make sure you have really answered all the comments. Open and transparent communication is always really important. When you disagree with the comments of the editor or the reviewer you can raise this, but keep in mind care and respect: respect for their work and the time they put in. We need to remind ourselves about the language we use in such communications and make sure it’s respectful. Accountability is particularly important for integrity. As a researcher producing research outputs, you want to publish the best quality research, and peer review is an opportunity for you to learn and make your research better.

Now, how do those principles apply to being a peer reviewer? Let’s think about honesty. Obviously, you need to look at both the positive and negative aspects of the work. There is also a personal element of honesty: knowing your own knowledge and its limitations, and asking yourself whether you are actually the right person to judge the whole work and all of its aspects. Rigour is obvious: pay attention to detail. Then we come to open and transparent communication. Communication is a two-way street. You can be honest about your opinions, and if you feel there are problems with the work you can raise them, and you should raise them. But you should also be honest about any potential conflict of interest of your own. Communicate with editors so they are clear on what’s happening. Going back to care and respect for all of the people involved, editors or reviewers, make sure you use appropriate, respectful language. Being a peer reviewer carries considerable accountability. There is a personal responsibility involved, because when you’re doing peer review you are contributing to general knowledge and a stronger research community. That’s a really big responsibility.

So to finish up, I think peer review is extremely important in evaluating and producing high-quality research, and it can support integrity, but research integrity can also support peer review, and together they make research, researchers, and the research community stronger.

Matt: I like the turning of the question on its head.

Panel discussion

Matt: We are going to try to stick to the angle of how peer review can help ensure the integrity of research rather than the integrity of peer review. We will probably stray into publication ethics as well, so let's not worry too much, but we'll try to keep ourselves on theme. I'm going to start with a question which John has touched on already: can we expect peer review to detect misconduct?

Caroline: I would answer that by saying that, as I alluded to earlier, peer review isn't ever going to be a perfect system. The aim of peer review is to check the validity of the research. It's important to guide reviewers on what the expectations are around their input. It's designed to pick up errors and mistakes, and to provide guidance, recommendations and suggestions to the author. It isn't really designed to detect deliberate misconduct, for example falsification and fabrication of data. Some of those are very, very difficult to detect. Peer reviewers are not always going to have the tools and the expertise to pick them up. Peer review is a really essential part of the process, and it strengthens the validity and the credibility of research, but it's not reasonable to rest the burden of that detection solely on the shoulders of peer reviewers, although they're certainly important to the process.

Magda: Coming from a research integrity perspective: what is research for, why is it important, why do we do it? For me, it's all based on trust, right? Researchers need to trust each other to do research, because they usually build on previous work. Similarly, peer reviewers need to approach papers from a position of trust, because if we don't trust anything we see and question everything, how can we move forward? I absolutely respect what John's doing, going through data. I think that's amazing, an enormous amount of work. But the reality is that not everyone is able to do that, and even John said it is a massive time commitment. But I think there's a second part to it. On one hand there's trust: I would expect peer reviewers to trust the research they're reviewing. The second issue is training. Peer reviewers don't get any formal training on being peer reviewers, and now we're expecting to add another layer of checks, for publication ethics or misconduct issues. Have they had training to even know what those issues are? If you don't know what they are, how can you recognise them? So I feel it's a bit unrealistic to expect peer review to detect misconduct.

Gowry: I think peer review as it's currently done is unlikely to detect misconduct. However, it was interesting that in our survey, researchers tended to believe that peer reviewers have the ability to detect misconduct, and we found that this belief, this perceived likelihood of detection, was associated with a reduced tendency to engage in research misconduct. So that's really quite interesting: as a research community, I think we tend to value peer review as being able to really spot fabricators and falsifiers when in reality it's not able to, the way it is set up. However, I do also want to ask: when we talk about peer review, are we talking about journal peer review? I assume that in this context we are talking about journal peer review. COVID-19 has shown us something slightly different. During COVID-19 we have seen the social media community of researchers and others calling out research that has been falsified and fabricated. There are some amazing examples there. There have also been heated discussions on how different research has been conducted. So I think we need to define the context. In the journal context, I don't think peer review is currently set up in a way that allows detection of research misconduct, but I do think that in open science, where we are sharing preprints, study protocols and datasets, research misconduct is probably more likely to be detected, not necessarily by journal peer reviewers but by the larger scientific community.

Matt: It's an interesting point you raised there, about the unintended consequence that if we are honest about the limitations of peer review in catching misconduct, then it might incentivise people to commit more misconduct. Let's hope not.

John: Well, obviously peer review can detect fraud and fabrication, because I've done it and lots of other people have done it. It's clear that often it doesn't, because the problems are then identified after publication, and you don't get to hear about the cases identified before publication. It should do better than it does before publication, because some instances of data fabrication are really quite dumb. The people we tend to spot are those who are not very good at telling lies. They tell fairly facile lies, and you can spot unnatural, almost impossible distributions on graphs that are apparent as soon as somebody points them out to you. I think peer review should do a better job than it does. It certainly can't identify all cases: as I mentioned earlier, from the summary data alone you identify maybe only about one in 20 false papers. At least that's me; other people might be able to spot a greater number, I don't know. So it can, and it should, do better than it does. But no, it will only identify a small proportion unless we have access to much more data.

Matt: Would sharing raw data during submission be effective, and would peer reviewers look at it? Many journals are moving to open data and data sharing statements, although these often don't require that the raw data actually be available during submission. PLOS has started asking for raw Western blots, and some other publishers have gone down that route as well. Can we have raw data shared during review, would reviewers look at it, and would it be fruitful?

Magda: In journal review, when you have one, two or three reviewers, they might not be able to spot it, but with community review the research community has a much bigger opportunity to spot it, and it does. We know that post-publication review, whether official or unofficial, happens and is actually really good at spotting those things. Now that we have more preprints, and these are available much earlier on, I will be interested to see whether those issues come up earlier because of how many eyes are looking at them.

Gowry: It's really good – not just good, but really vital – for journals to move towards a model of asking for the raw data rather than it being a check-box exercise, which it currently mostly is. In most instances you're only asked to declare. I think it was a Nature piece which recently reported that a staggering percentage, up to 90% I think, filled in data availability statements, but less than 10% of authors actually responded when asked for the data. These numbers are very worrying. We definitely need to work out how we can move to a model where the raw data is supplied at the point of submission. That's the first part of the story, but there are also follow-up consequences of having submitted this raw data: first, are peer reviewers going to have the expertise to look at this raw data and make sense of it, and secondly, do they have the time to do that? I would definitely be a proponent of going for the raw data. I think that clearly, hands down, would help peer review in supporting research integrity, but how will we deal with the consequences of supplying that data: having the right expertise and the time to actually assess whether the analysis has been done the way it should be? So I think there are a number of follow-up questions I don't have the answers to.

Matt: This chimes with the question of whether it would be helpful to have an expert or sleuth on the journal's editorial panel to help check and verify studies. Many publishers now have research integrity teams, such as the one I used to head, but we certainly didn't try to verify every single study. I've done similar checks to John on raw data, and it is extremely laborious: I've done digit checking, attempted to use Benford's Law, and things like that.

There was a study that was published just last week that showed that peer review increases the statistical content of articles, that articles that are desk rejected have less statistical content, and that articles are rejected more when reviewers focus on statistics. Does this show us a glimmer of hope that peer review is working to help improve research integrity?

Caroline: I think there are glimmers of hope, and I noticed Chris Graf posted a piece in the Scholarly Kitchen earlier in the week about Reasons to be Cheerful, a good counterbalance to some of the concerns we're exploring here. On a slight tangent, I was going to mention the concept of Registered Reports. It involves peer review before data collection, thereby holding researchers to account to their original hypothesis. It's not necessarily applicable to every field of research, but it's something that we at SAGE are certainly interested in exploring and doing more with in our journals where it's relevant.

Matt: Do we need specific expertise during peer review? How do we get it?

John: Yes. You probably want a bit more than that. I think it's very difficult for individual journals. If you're going to employ someone, it's quite an expensive thing to do. You probably need about 0.4 of a person per journal, depending on the journal obviously. So maybe publishers could share that sort of expertise across more than one platform. You could have an organisation independent of journals, but that needs a whole big setup, which would also be expensive. But yes, I think one does need expertise. Coming back to a comment earlier about trust, well, I guess my default is to distrust everybody; it's just a question of how much I distrust them. So I've got a sliding scale of distrust. The authors I trust the least are those who've previously had retractions for falsified or fabricated data, who've got expressions of concern on their papers, or who submit from centres where we have previously identified falsified data. And unfortunately, also from countries that don't have very rigorous research integrity structures that protect researchers, patients and so on. So I've got this sliding scale that combines various factors about the submitter. If you were going to spend a lot of time, I would tend to use that sliding scale to work out whose submissions to spend time looking at, and you might even use it to reject some submissions out of hand without even looking at the data.

Matt: There's a real risk when we're talking about using factors to identify things. You can use them to flag things up, but the idea of moving to a post-human AI world where we just use predictive factors to reject articles is what people are wary of. The Principles of Transparency and Best Practice in Scholarly Publishing have just been updated and include what was a long-standing statement from COPE and other organisations that we need to avoid geopolitics, that is, the background of the authors affecting editorial decisions. It's a very tricky area.

We're talking generally about how much peer review can help uphold research integrity, but this is the middle bit, where the work has been submitted to a journal and isn't published yet. What about before and after? Can institutions do more pre-checking before articles are submitted? Can we do more checking at the preprint stage? And can the community do a good job with post-publication review, either generally using PubPeer or on particular platforms like F1000Research?

Magda: This is a very important but very difficult question, and it identifies a definite gap we have at the moment, where responsibility for peer review falls somewhat into the gaps. On one hand, you have researchers who are employed at institutions, either producing the research or reviewing it, so you think there's an obvious role for institutions here, but then you have the publishers that publish it and make money from it. So who has more responsibility? Who should be training the peer reviewers and who should be checking the data? I don't think there's an obvious answer one way or the other. The reason we are here is that nobody really wants to take responsibility, right? Because of resources. Doing all of this, regardless of who does it, is a massive undertaking which requires hiring people and training people, and they will make mistakes. So who's responsible for their mistakes? Because it's such a big responsibility, nobody will say "yeah, we can do it". It's our joint responsibility, possibly with funders, to create a situation where this is possible, but I don't think it's something one stakeholder can take on alone.

Caroline: We all have to play a part and take the steps that we can. Publishers are building research integrity teams, and we've got organisations such as COPE, EASE, UKRIO and SOPs4RI that are all trying to offer guidance, support and best practice. Universities and funders absolutely play a part as well. Where there are opportunities for us to work together collaboratively, we should take them. This is why webinars and panels such as this are important, because they are an opportunity for us to collaborate and share. You're right: no one person, no one institution, no one organisation has purview over the scholarly record. There isn't one organisation or stakeholder in this process that is the ultimate arbiter of what content is okay and what is not. So we all have to play a part, and organisations like COPE take that role very seriously. I'm really pleased with the types of materials that COPE puts out: not just guidance, but also discussions, webinars and forums. I feel there's a wealth of resources available, not just for publishers and journal editors but for researchers and universities too, that we can all make use of in making this work better.

Magda: COPE has now opened up membership to institutions, and UCL has joined as a member. We are particularly excited about working with COPE and hopefully helping to move some of those discussions forward and contributing towards resolving those issues one way or another. I'm very much looking forward to having more of those discussions and trying to bridge the gap that we have around peer review.

Gowry: Perhaps we should focus a little more on peer review happening before the end stage of writing up your study, that is, before the analysis has been done, when the study protocol and the study methods are being formed. I know this is already going on, and there is already some evidence to show that Registered Reports work. It's not a perfect system, but we can have peer review happening earlier instead of focusing only on what we do with the research that ends up on the editor's desk. So we should approach this in a preventative way, with institutions and funders promoting review of research at each stage at which it's being done. I know that PCI Registered Reports does a lot, using its community of peer reviewers to give research a stamp of approval, which different journals then have different ways of accepting or not. We need peer review to start a little earlier in the research cycle.

Matt: What are the common indicators of fabricated or falsified data in RCTs and what are common red flags that you see for editors and peer reviewers? It’s what my team at BMC called my spidey sense. What sets off your spidey sense during peer review?

John: Trial registration beforehand can be useful, although we've had a few papers that we identified as having substantially falsified data where the trials were pre-registered and the reported methodology matched the registration. On clinicaltrials.gov, I'll compare the first and the last versions of the registration, I'll look at the historical changes, I'll look at the rapidity of recruitment, I'll look at the number of dropouts, and I'll look at the similarities between the paper and other papers the authors have published. We obviously have plagiarism software, as many other journals do. As for the 'unpolitical' comments I made earlier about where papers come from, I'm afraid it is true that we have identified more falsified data from certain places than others, so I will carry on using that until those countries and institutions have rigorous processes. So where does this process start? I think it's only reliably going to start in universities and other research institutions. If their systems become, not watertight, but much better than they are at the moment, then I think we'll receive fewer papers, and more of them will hopefully be of high quality. So those are my red flags. Perhaps the simplest ones are the numbers in tables and figures.

Magda: A paper by Lisa Parker and others that came out quite recently looks at how to screen for fake papers; it's quite an interesting analysis. From my editorial days, I always looked at ethics. Obviously that's if you're dealing with research that has human participants, but it's often overlooked: if the research is fake, the ethics section is usually written in a way that sounds just a bit dodgy. You read it and think "what do they mean by that?". Pay attention if you have any concerns about ethics generally, and this applies to animal research as well, because in my experience, the studies I had concerns about that turned out not to have been done well were ones where I had also had ethical concerns, for example about how the animals were treated and what was done to them.

Caroline: What you're talking about is incredibly important, but it's also resource intensive and requires such attention to detail and expertise that it's very hard to scale. A colleague at SAGE has been using technology to try to identify replicated language in peer reviews. I think technology has to be part of the answer here. There are obviously plagiarism detection technologies, but also other types of technology that identify image manipulation, which is an increasing issue. That has to go hand in hand with human judgement, assessment and analysis. Whichever way you look at it, it's labour intensive, and it requires time, resources and expertise. We all have to do our best with that, but it's never going to be a perfect system.

Matt: Once you've got hold of the raw data, never mind the privacy issues with clinical data, what do we do with it? Can we really check it manually? That plays into the question of whether we need technology, tools that can supplement or add to human judgement. That's the question posed by Tracey Weissgerber and colleagues in a recent paper in BMC Research Notes: is the future of peer review automated?

Magda: More and more will be automated and that's a good thing, but for now nothing will replace the human aspect of looking at things, because of what we've learned, for example, with plagiarism. Anyone can now run a plagiarism check quickly and easily, most journals run it automatically, and the same happens at universities, where a lot of theses are run through a similar process. But once you get the report, it doesn't tell you whether there's plagiarism or not. There's still a need for a human to look at the report and understand whether there are concerns. The technology will get better, but there's the human element of judging reasonableness – and I know it's terribly unscientific to think about things being "reasonable" – but there is a balance of probabilities as to whether things look iffy or not, and I feel human judgement is needed there. I'm going to posit something that might not be very popular: I believe peer review would be better if we had professionalised checking of publication ethics, by professional publication ethics reviewers, so that scientific peer review could focus on what it is there to do, thinking about the science, because that's the expertise only those scientists will have. Publication ethics, on the other hand, is something that can be trained, taught and specialised in, and those specialists can learn how to use technology most effectively and what its limitations are.

John: Computerised algorithms that look at summary data in papers, at individual patient data, and at Western blots and other plots are definitely the future, but obviously the same technology can be used by people who wish to make up data, and that's bound to happen. I could write a computer program now that would make sure that anything I submitted to myself would escape my own detection. It's not that difficult to make up vast spreadsheets of individual patient data that fulfil most of the statistical criteria you might expect. Really, the only way forward is to try to improve the reliability and truthfulness of science at source, where it's done, rather than once it gets to the journals. We need to improve training and resources at the base level.

Matt: We have already seen this kind of arms race with plagiarism. People realised that detection tools generally look for blocks of text, although some also look for 'bags of words'. People started to use synonyms, which creates gibberish if they're not careful, and so now tools look for those common gibberish synonyms as well. It's a constant struggle: you put a tool in place, then people learn how to circumvent it and maybe give themselves away in the process. There are going to be lots of unforeseen consequences with the use of tools.

Speaking of unforeseen consequences: if we're being super cautious and super sceptical about everything, and not trusting anyone, we're favouring an incremental, tinkering approach to science. How do we make sure we don't unduly stifle the revolutions that lead to breakthroughs in science?

Caroline: This speaks somewhat to bias in peer review in general, and to confirmation bias. More established or well-known scholars get through peer review much more easily, and double-anonymised peer review plays an important part there. Ultimately, it goes back to the training of reviewers, which we've talked about as part of the solution without necessarily saying how it would be funded and delivered. I also think we need to talk about the system of incentives in scholarly publishing and career progression: scholars are under such pressure to publish that there is an exponential increase in outputs and in submissions to journals, which in turn places greater pressure on the peer review ecosystem at large. Some of this needs to be tackled even further upstream than research integrity offices. Why are academics putting their work out, and what is the pressure that makes them behave too hastily, or in some cases unscrupulously, to get their work out? It's not just about the role of peer review in and of itself. It's also about a much wider system of incentives.

Matt: On the point of more established researchers, a recent study looked at a paper by a Nobel Prize winner and his more junior colleague. It found that when the paper was sent out either with no names, with the Nobel Prize winner's name, or with the junior colleague's name, the Nobel Prize winner got the easiest ride in peer review, followed by the anonymised version, and finally the version naming the junior colleague. There was a confounder: it might not just be that she is junior, but also her gender and the fact that she has a non-Western name. Does this suggest that double-anonymised review might be best for the integrity of research, even though many journals are now pushing towards transparency and open review?

Magda: I think it's interesting that double-anonymised peer review has been with us for a long time in publishing and has been tested and trialled, and inevitably it runs into the issue of what's truly anonymous. What counts as anonymous in science generally is a big question, considering data protection laws. The problem is that many people, rightly or wrongly, think they know who the authors are, especially in small research fields. This will depend on the field: in general molecular biology, who knows, but in specific areas of molecular biology the field may actually not be as massive as you'd think. People tend to write in a certain way. When I was a researcher, there were some papers where I knew who wrote them, which group wrote them, because there is a style to what they do and the topics they develop. I don't think it necessarily works every time, but it certainly is a good idea, and I think that's why we need to have options. Personally, I've always liked open and transparent review. I think transparency is the fairest to everyone involved, and as long as the content of the peer review comments is known, that's always a win.

Gowry: With open science and the push to share preprints and study protocols, it will be increasingly difficult to have double-anonymised peer review. Just look at where the scientific community is moving, not only in terms of the responsible conduct of research, but also in how we are going to reward open science practices. The open peer review model would be my preferred choice, given the trend towards increased transparency, ideally leading to accountability and hopefully also trustworthiness, in knowing who said what and why they said it. That would be my concern: how do we maintain double-anonymised peer review given the whole move towards open science?

John: I'm more than happy as a reviewer to give my name every time I review. But not knowing who has submitted the paper, that side of the anonymisation, to some extent ties my hands in using what I've already learned. Knowing who is submitting the paper, even if I'm not suspecting falsified data, allows me to build a picture of their research projects, with this paper as the most recent addition. Sometimes people will salami-slice research projects, and so on. Knowing who has submitted a paper can help with that.

Gowry: On anonymisation of review, it's probably also worth asking: why do we need double-anonymised peer review? What is the bigger problem? The research just quoted about certain people getting preferential treatment: that is the problem we need to talk about and address. Double-anonymised peer review is putting a sticking plaster on the problem.

Matt: Why do we have biases in the first place?

We can end with a rapid question: what’s the one change you want to make to peer review to help improve research integrity, and what message do you want to get across to people about training and education?

Caroline: There's so much training, resources and guidance available, and a lot of it is free, so my message is to encourage people to use it. As somebody who is very focused on diversity, equity, inclusion and accessibility for both SAGE and COPE, I think better awareness and training on those issues among reviewers, journal editors, and publishers too, will greatly support peer review and increase representation across the scholarly ecosystem.

Magda: I would like to see professionalisation of publication ethics review. As a last message: we can all lead in research integrity, no matter where we are in the research lifecycle. Whether we're reviewing or responding to reviewers, how we behave will affect others around us. So if we set a good example, that will help them to be better themselves. That's where research integrity principles can hopefully help, even if you don't have formal training.

John: I’ll stick to type, and I’ll just say: mandatory, raw data.

Gowry: I'll just stick to training in good and responsible peer review: how can I be a good and responsible peer reviewer? I think we should train researchers in this from the get-go.