AI in research

AI in research – resources

- “I can’t help falling in love with AI”: chatbots and research integrity

- Resources list

- Tips for researchers from our 5-day knowledge challenge: Artificial Intelligence and Research Integrity (January 2024)

“I can’t help falling in love with AI”: chatbots and research integrity

Chatbots such as OpenAI’s ChatGPT, the open-source BLOOM, Bing Chat, or Google Bard are exciting. But don’t be fooled, advises our Research Integrity Manager Matt Hodgkinson.

Published: July 25, 2023

![An industrial robot arm with a pen attached to a black ink reservoir. The robot is writing in German using blackletter (Gothic) calligraphy on a paper manuscript page. The installation 'bios [bible]' consists of an industrial robot, which writes down the bible on rolls of paper. The machine draws the calligraphic lines with high precision. Like a monk in the scriptorium it creates step by step the text. http://www.robotlab.de](https://ukrio.org/wp-content/uploads/Robot-writing-by-Mirko-Tobias-Schaefer.jpg)

The explosion of interest in chatbots is unsurprising given that the content they generate is impressive, especially compared to earlier clunky efforts in automated language production. They have passed the Turing Test in a way that ELIZA never could. Although neural networks date back decades, the underlying ‘transformer’ technology that has enabled the new chatbots is only six years old. This is genuinely new ground.

The nuts and bolts

However, these new chatbots are not truly Artificial Intelligence (AI) because they are not intelligent. These large-language models (LLMs) work by creating a network of billions of connections between words or stems of words – known as ‘tokens’ – and weighting those links based on training material, often taken from the web, to suggest the next appropriate token based on a prompt.
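To make the idea of weighted links between tokens concrete, here is a deliberately tiny sketch. It is not how any real chatbot is built: real LLMs use neural networks with billions of learned weights and sub-word tokens, whereas this toy simply counts which whole word follows which in a short training text. The function names `train_bigram_weights` and `suggest_next` and the training sentence are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_weights(text):
    """Count how often each token is followed by each other token."""
    tokens = text.lower().split()
    weights = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        weights[current][following] += 1
    return weights


def suggest_next(weights, prompt):
    """Suggest the most heavily weighted token after the prompt's last token."""
    last = prompt.lower().split()[-1]
    candidates = weights.get(last)
    if not candidates:
        return None  # the toy model has never seen this token
    return candidates.most_common(1)[0][0]


# Hypothetical training material, standing in for text taken from the web.
training_text = "the cat sat on the mat and the cat slept on the sofa"
weights = train_bigram_weights(training_text)

print(suggest_next(weights, "I saw the"))  # prints "cat"
```

The suggestion is "cat" only because "cat" follows "the" most often in the training text. Nothing in the model knows what a cat is, which is the point made above about the absence of understanding.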

Human moderators usually rate the outputs of the raw model on how coherent, convincing, or human-like they sound as a second layer of training, known as Reinforcement Learning from Human Feedback or RLHF. The refined model is then ready for users.

There is generally no explicit knowledge, no anchoring to the real world in the form of curated databases or peer-reviewed outputs (though such ‘knowledge injection’ is possible), and no semantics – the bot doesn’t understand the meaning of what it is saying, even if the reader finds meaning. Like many populist politicians, LLM chatbots produce “humbug” (Harry Frankfurt, who passed away this month, famously popularised a more offensive term that we are too polite to repeat).

Using chatbots in academia

There is no consensus on whether to allow the use of LLMs in scholarly work, but if they are used then the authors must take responsibility for everything that is written. A sample corporate policy for their use is available as a guide for organisations, but attempting to detect the use of LLMs (often based on ‘burstiness’ and ‘perplexity’) may be futile as the tools quickly come to better mimic fluent language, and the detectors may produce false positives and discriminate against non-native speakers and people who are neurodivergent.
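For readers unfamiliar with the two detection signals named above, the following is a rough sketch under stated assumptions. Perplexity is taken here in its usual sense: the exponential of the average negative log-probability a language model assigns to each token, so predictable text scores low. Burstiness has no single agreed definition; this sketch assumes it means the spread of perplexity across sentences. The word-frequency model, the function names, and the reference text are all invented for illustration and do not represent any actual detector.

```python
import math
from collections import Counter
from statistics import pstdev


def unigram_model(reference_text):
    """Build a toy word-probability function with add-one smoothing."""
    counts = Counter(reference_text.lower().split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra slot for any unseen word

    def probability(word):
        return (counts[word] + 1) / (total + vocab)

    return probability


def perplexity(probability, sentence):
    """Exponential of the mean negative log-probability per word."""
    words = sentence.lower().split()
    if not words:
        raise ValueError("sentence must contain at least one word")
    log_prob = sum(math.log(probability(word)) for word in words)
    return math.exp(-log_prob / len(words))


def burstiness(probability, sentences):
    """Assumed definition: spread of per-sentence perplexity."""
    return pstdev([perplexity(probability, s) for s in sentences])


# Hypothetical reference text standing in for a model's training data.
model = unigram_model("the cat sat on the mat the dog sat on the rug")

print(perplexity(model, "the cat sat"))        # familiar words: lower score
print(perplexity(model, "zebra quantum flux"))  # unseen words: higher score
print(burstiness(model, ["the cat sat", "zebra quantum flux"]))
```

A writer with a limited vocabulary, or one who favours common phrasing, will also score low on perplexity, which is one way such detectors can wrongly flag human authors.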

However, a consensus quickly formed that granting authorship to such tools is not allowed, as agreed by COPE, WAME and the ICMJE, because the tools and their providers cannot take responsibility for the output. Details of the use of LLMs must instead be declared in the Methods and/or Acknowledgements.

LLMs should not be used by peer reviewers or editors, because:

- This often breaches confidentiality. Some platforms, e.g., Duet AI in Google Workspace, retain content and will have people read it. Other tools may not declare this but still do so, e.g., for training material. Submitted content could be leaked or hacked, or content used in training might be closely reproduced if a prompt is specific enough.

- The tools are not validated for the critical appraisal of scholarly content and risk producing inaccurate, cursory, and biased reviews.

- If someone is unable to assess a manuscript, they should decline to review or edit rather than relying on a chatbot.

Just as in academic teaching environments where many students have embraced a new method to reduce their time and effort (like cutting-and-pasting from Wikipedia), many researchers will use these tools, legitimately or not. The profusion of nonsense from paper mills shows an existing appetite. Educators incorporated Wikipedia into teaching; educators and researchers will inevitably incorporate ‘AI’. The Russell Group of universities has outlined principles for academic integrity, listed below, and likewise we need to educate people on the principles and limits of these new tools in research.

- Universities will support students and staff to become AI-literate.

- Staff should be equipped to support students to use generative AI tools effectively and appropriately in their learning experience.

- Universities will adapt teaching and assessment to incorporate the ethical use of generative AI and support equal access.

- Universities will ensure academic rigour and integrity is upheld.

- Universities will work collaboratively to share best practice as the technology and its application in education evolves.

– Russell Group statement, 2023

Risks of using chatbots

If you use a chatbot to create or revise text, know the caveats and risks. Ask yourself why you are using a tool that isn’t validated: you’d (hopefully) not do that in another part of your research process.

You will need to fact-check every statement and find appropriate support in the literature. Many tools invent references and scholarly claims (known as ‘hallucinating’). If a reference exists, you do not know whether the reference supports the claim. You must ensure that the writing is internally consistent, because chatbots can contradict themselves. The less expertise you have on a topic, the more likely it is that you will be fooled by fluent but incorrect outputs.

If you use a chatbot to write about scholarship then it may not give an accurate, up-to-date commentary. Its training set will be out-of-date and could be biased; the text will not come from a thorough search of the latest literature or consider the quality of the evidence.

It might be tempting to use LLMs for rote tasks such as appraising the results of a systematic review, but although LLMs can be asked to summarise information from a specific document or article, the summaries are not always accurate, particularly when there are specific and important facts and figures involved.

The more specialised the prompt, the greater the likelihood that an output may be unoriginal, either in the wording (due to ‘memorising’ training material) or the ideas expressed. Plagiarism includes using other people’s ideas without appropriate attribution, not only their words. Using an intermediary like a chatbot to launder content and inadvertently commit plagiarism isn’t a defence. If someone else uses the same tool with a similar prompt, it may produce a very similar output for them as it produced for you: you may be accused of plagiarism or outed as having used an LLM if you didn’t declare it.

The models change over time and even stable models include stochasticity. The output may not be reproducible, which may or may not be a concern. More worrying is evidence that the factual accuracy and analytical ability of GPT-4 have worsened over time. As more AI-generated content appears on the web, more will be harvested in training future tools. Unless guarded against, this will degrade their quality, potentially leading to a ‘model collapse’. Malicious actors may also ‘poison’ LLMs by publishing misleading content where they know it will be harvested.
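The stochasticity mentioned above can be illustrated with a minimal sketch. It assumes that a model picks each next token at random in proportion to its weight, which is a simplification of how sampling works in practice. The candidate tokens, their weights, and the function names are hypothetical, and hosted chatbots may not let users fix a random seed at all.

```python
import random


def sample_next(weighted_tokens, rng):
    """Pick one token at random, in proportion to its weight."""
    tokens = list(weighted_tokens)
    weights = [weighted_tokens[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(weighted_tokens, length, seed=None):
    """Draw a sequence of tokens; a fixed seed makes the draws repeatable."""
    rng = random.Random(seed)
    return [sample_next(weighted_tokens, rng) for _ in range(length)]


# Hypothetical next-token weights for a prompt such as "The effect was ...".
candidates = {"significant": 0.5, "modest": 0.3, "negligible": 0.2}

run_a = generate(candidates, 10, seed=42)
run_b = generate(candidates, 10, seed=42)
run_c = generate(candidates, 10)  # unseeded: may differ on every run

print(run_a == run_b)  # True: the same seed reproduces the same draws
print(run_c)
```

Without a fixed seed, and without a fixed model version, the same prompt can yield a different answer each time, so keeping a record of the exact output you used matters more than being able to regenerate it.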

If you come to rely on a tool to write, you may gradually lose your skills in literature search, critical appraisal, summarisation, synthesis, and writing fluently in prose. Your audience may also not welcome the use of an LLM, even when disclosed, if they are expecting a genuine human view.

Ethics and principles

There are additional ethical issues outside scholarship to consider. The data centres and processing needed for LLMs use large amounts of energy. Is their use appropriate when we need to reduce carbon emissions to limit the effects of the climate crisis? Further, the RLHF step usually involves low-paid workers, often from low-income countries. Lastly, the training of many LLMs relies on copyrighted material used without permission.

- Safety, security and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

The regulatory framework for AI is in flux, but consider the UK government’s five principles, listed above, before implementing any process that uses automated tools.

Fools rush in

The tech sector is prone to hype, as we saw recently with NFTs and the rest of Web3 and the metaverse. Keep your head above your heart and don’t rush to use automated tools in your everyday scholarship. Focus on tools such as those designed and tested for the structured appraisal of scholarly publications and in research assessment, rather than trendy but unreliable chatbots.

Though chatbots are new, longstanding principles of good research practice can be applied to help navigate the challenges that they pose. As part of the independent and expert advice and guidance we provide to the research community and our subscriber organisations, UKRIO is considering how best to address the issues discussed in this article. We’ll be doing more on AI in research in the future and will keep you posted.

Disclosure: None of this text was produced using an LLM or another writing tool, other than Microsoft Word. A garbled sentence in the second paragraph was edited on 11 August 2023 after errors were noted by a reader.

Resources list

📰 – News

💡 – Opinion

🔬 – Research

⭐ – Review

Russell Group, 2023. https://russellgroup.ac.uk/news/new-principles-on-use-of-ai-in-education/

Davies, M., & Birtwistle, M. (2023). Regulating AI in the UK: Strengthening the UK’s proposals for the benefit of people and society. Ada Lovelace Institute. https://www.adalovelaceinstitute.org/report/regulating-ai-in-the-uk/

⭐ Bender, E. M., Gebru, T., McMillan-Major, A. & Shmitchell, S. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜. FAccT ’21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–623 https://doi.org/10.1145/3442188.3445922

https://publicationethics.org/cope-position-statements/ai-author

Artificial intelligence (AI) in decision making. COPE, 2021 https://doi.org/10.24318/9kvAgrnJ

Chatbots, Generative AI, and Scholarly Manuscripts. WAME. https://wame.org/page3.php?id=106

Alfonso, F., & Crea, F. (2023). New recommendations of the International Committee of Medical Journal Editors: use of artificial intelligence. European Heart Journal, ehad448. https://doi.org/10.1093/eurheartj/ehad448

https://github.com/KairoiAI/Resources/blob/main/Template-ChatGPT-policy.md

💡 Brean, A. (2023). From ELIZA to ChatGPT. Tidsskr Nor Legeforen. https://doi.org/10.4045/tidsskr.23.0279

🔬 Liesenfeld, A., Lopez, A., & Dingemanse, M. (2023). Opening up ChatGPT: Tracking openness, transparency, and accountability in instruction-tuned text generators. arXiv:2307.05532 [cs.CL] https://doi.org/10.48550/arXiv.2307.05532

⭐ Lipenkova, J. (2023). Overcoming the Limitations of Large Language Models: How to enhance LLMs with human-like cognitive skills. Towards Data Science. https://towardsdatascience.com/overcoming-the-limitations-of-large-language-models-9d4e92ad9823

💡 Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. Patterns, 4(7):100779. https://doi.org/10.1016/j.patter.2023.100779

💡 Harrer, S. (2023). Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. eBioMedicine, 90, 104512 https://doi.org/10.1016/j.ebiom.2023.104512

🔬 Gravel, J., D’Amours-Gravel, M., & Osmanlliu, E. (2023). Learning to Fake It: Limited Responses and Fabricated References Provided by ChatGPT for Medical Questions. Mayo Clinic Proceedings: Digital Health, 1(3):226-234. https://doi.org/10.1016/j.mcpdig.2023.05.004

🔬 Chen, L., Zaharia, M., & Zou, J. (2023). How is ChatGPT’s behavior changing over time? arXiv:2307.09009 [cs.CL] https://doi.org/10.48550/arXiv.2307.09009

🔬 Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., & Anderson, R. (2023). The Curse of Recursion: Training on Generated Data Makes Models Forget. arXiv:2305.17493 [cs.LG] https://doi.org/10.48550/arXiv.2305.17493

📰 Kaiser, J. (2023). Science funding agencies say no to using AI for peer review. Science Insider. https://www.science.org/content/article/science-funding-agencies-say-no-using-ai-peer-review

⭐ Hosseini, M., & Horbach, S. P. J. M. (2023). Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other large language models in scholarly peer review. Res Integr Peer Rev 8(4) https://doi.org/10.1186/s41073-023-00133-5

🔬 Checco, A., Bracciale, L., Loreti, P., Pinfield, S., & Bianchi, G. (2021). AI-assisted peer review. Humanit Soc Sci Commun 8(25). https://doi.org/10.1057/s41599-020-00703-8

💡 Schulz, R., Barnett, A., Bernard, R., Brown, N. J. L., Byrne, J. A., Eckmann, P., Gazda, M. A., Kilicoglu, H., Prager, E. M., Salholz-Hillel, M., Ter Riet, G., Vines, T., Vorland, C. J., Zhuang, H., Bandrowski, A., & Weissgerber, T. L. (2022). Is the future of peer review automated? BMC Res Notes 15: 203. https://doi.org/10.1186/s13104-022-06080-6

⭐ Kousha, K., & Thelwall, M. (2022). Artificial intelligence technologies to support research assessment: A review. arXiv:2212.06574 [cs.DL] https://doi.org/10.48550/arXiv.2212.06574

📰 Grove, J. (2023). The ChatGPT revolution of academic research has begun. Times Higher Education. https://www.timeshighereducation.com/depth/chatgpt-revolution-academic-research-has-begun (🔒)

📰 Conroy, J. (2023). Scientists used ChatGPT to generate an entire paper from scratch — but is it any good? Nature, 619, 443-444 https://doi.org/10.1038/d41586-023-02218-z

Use of generative Artificial Intelligence in PGR programmes. University of York Graduate Research School, 2023. https://www.york.ac.uk/research/graduate-school/research-integrity/ai/

💡 Dwivedi, Y. K. et al. (2023). “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71: 102642 https://doi.org/10.1016/j.ijinfomgt.2023.102642

💡 Berg, C. (2023). The Case for Generative AI in Scholarly Practice. SSRN. http://dx.doi.org/10.2139/ssrn.4407587

💡 Hosseini, M., Resnik, D. B., & Holmes, K. (2023). The ethics of disclosing the use of artificial intelligence tools in writing scholarly manuscripts. Research Ethics. https://doi.org/10.1177/17470161231180449

💡 Hosseini, M., Rasmussen, L. M., & Resnik, D. B. (2023). Using AI to write scholarly publications. Accountability in Research. https://doi.org/10.1080/08989621.2023.2168535

Generative AI in Scholarly Communications: Ethical and Practical Guidelines for the Use of Generative AI in the Publication Process. STM Association, 2023. https://www.stm-assoc.org/new-white-paper-launch-generative-ai-in-scholarly-communications/

🦮 Guidance for generative AI in education and research. UNESCO, 2023. https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research

💡 Kolodkin-Gal, D. (2024). AI and the Future of Image Integrity in Scientific Publishing. Science Editor. https://doi.org/10.36591/SE-4701-02

Five tips for researchers

Taken from our 5-day knowledge challenge: Artificial Intelligence and Research Integrity (January 2024)

1) Say no to AI for peer review

Don’t get a helping hand from AI when peer-reviewing papers or grants!

AI tools such as large-language models (LLMs), including OpenAI’s ChatGPT, the open-source BLOOM, Bing Chat, or Google Bard, should not be used by peer reviewers (for either publications or grants) or editors because:

- This often breaches confidentiality. Some platforms, e.g., Duet AI in Google Workspace, retain content and will allow people to read it. Other tools may not declare this but still do so, e.g., for training material. Submitted content could be leaked, hacked, or closely reproduced if a prompt is specific enough.

- The tools are not validated to critically appraise scholarly content and risk producing inaccurate, cursory, and biased reviews.

- If someone cannot assess a manuscript, they should decline to review or edit rather than relying on a chatbot.

2) Say no to AI being an author

LLMs can’t be an author.

Organisations such as COPE and WAME agree that large-language models (LLMs) should not be granted authorship because these tools and their providers cannot take responsibility for the output. Details of the use of LLMs must instead be declared in the Methods and/or Acknowledgements.

Likewise, Nature does not now accept AI-generated images and videos unless they are specifically for an AI journal.

Where AI tools are used, for example in preparing funding applications or generating or substantially altering research content, their use must be cited and acknowledged.

The University of Liverpool has a useful guide on how to appropriately reference AI use.

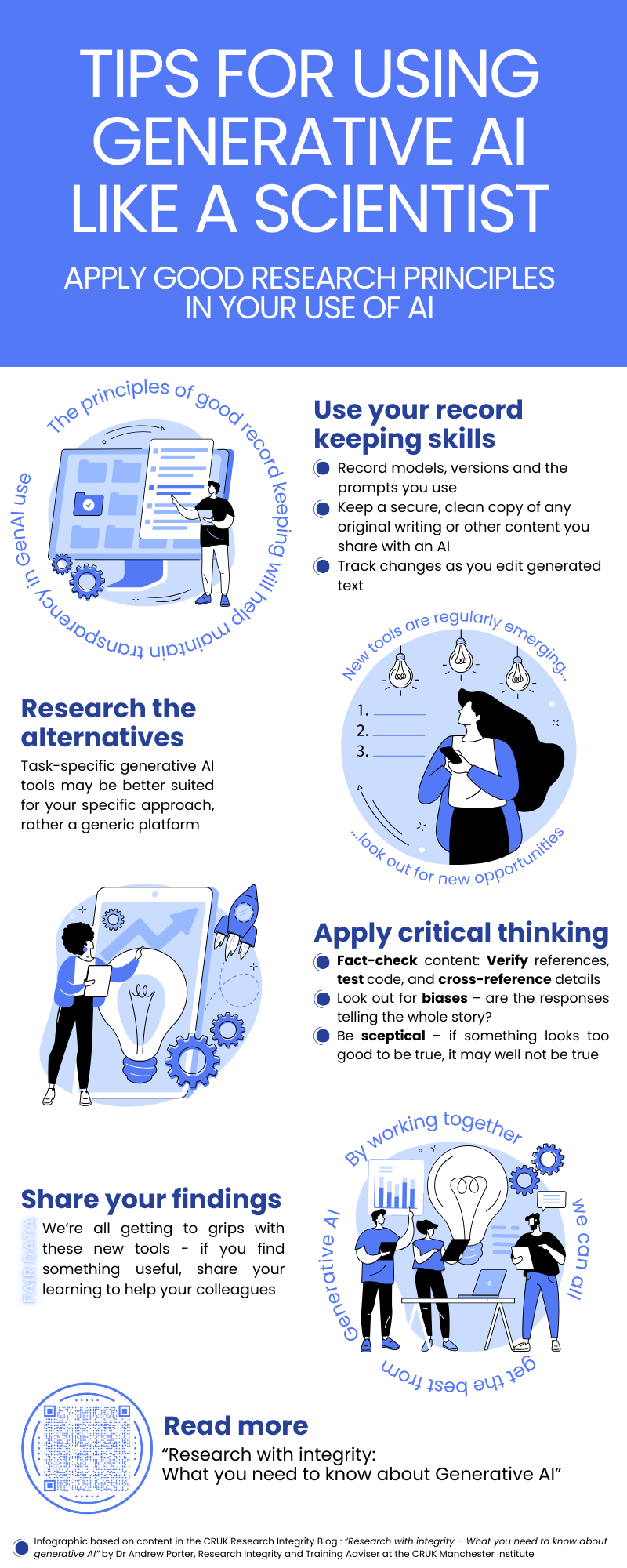

3) Apply good research practice and be aware of breaches

Infographic created by Dr Andrew Porter, Research Integrity and Training Adviser, CRUK Manchester Institute

Approach generative AI with the same care as you would with any other research tool. Look at this useful infographic with tips for using generative AI. Note the importance of record keeping no matter your discipline.

Be aware of the risks of inadvertent breaches to good research practice when using generative AI.

Serious intentional breaches, which amount to research misconduct, could include plagiarism (if you do not reference generative AI as the source) or misrepresentation (if you present generative AI-produced research as your own).

4) Seek guidance

Seek out new guidance on AI use relevant to your research.

Actively look for new guidance that is relevant to your field and that your employer produces or endorses. This is an ever-changing field with far-reaching implications for society, so it is imperative that individual researchers actively seek and understand what current best practice looks like for their research when using generative AI and other automated tools.

For example:

5) Discuss the issues

Be part of the conversation in determining ethical and responsible use of AI in research.

Be part of the conversation in shaping guidance for ethical and responsible use of AI in research.

Get involved:

- Turing trustworthy AI forum at the Alan Turing Institute

Last revised January 2024.

Please note that this list of resources is not intended to be exhaustive and should not be seen as a substitute for advice from suitably qualified persons. UKRIO is not responsible for the content of external websites linked to from this page. If you would like to seek advice from UKRIO, information on our role and remit and on how to contact us is available here.